ICCV '21: Adversarial Attacks Are Reversible with Natural Supervision

Abstract

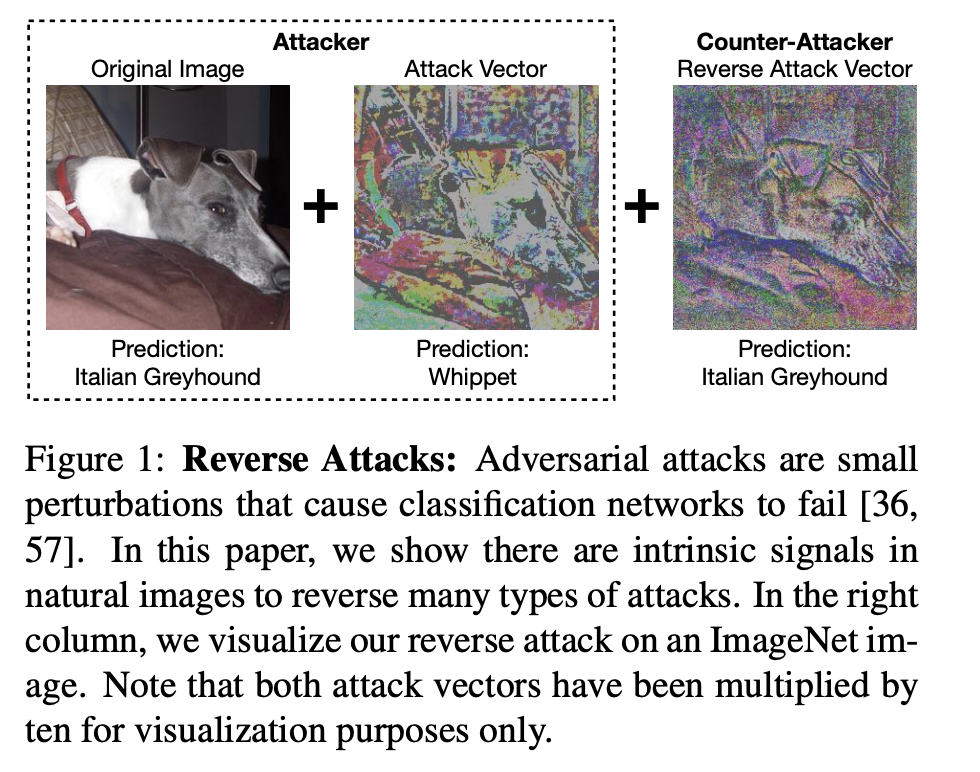

We find that images contain intrinsic structure that enables the reversal of many adversarial attacks. Attack vectors cause not only image classifiers to fail, but also collaterally disrupt incidental structure in the image. We demonstrate that modifying the attacked image to restore the natural structure will reverse many types of attacks, providing a defense.

Background

Earlier defenses against adversarial attacks focused mainly on adversarial training, but training-based defenses do not cope well with the differing characteristics of each kind of attack.

In the authors' words, training-based defenses cannot adapt to the individual characteristics of each attack at test time.

Hypothesis

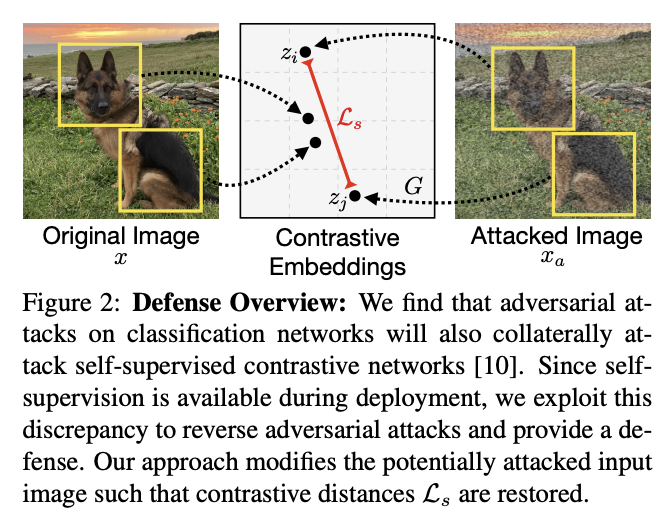

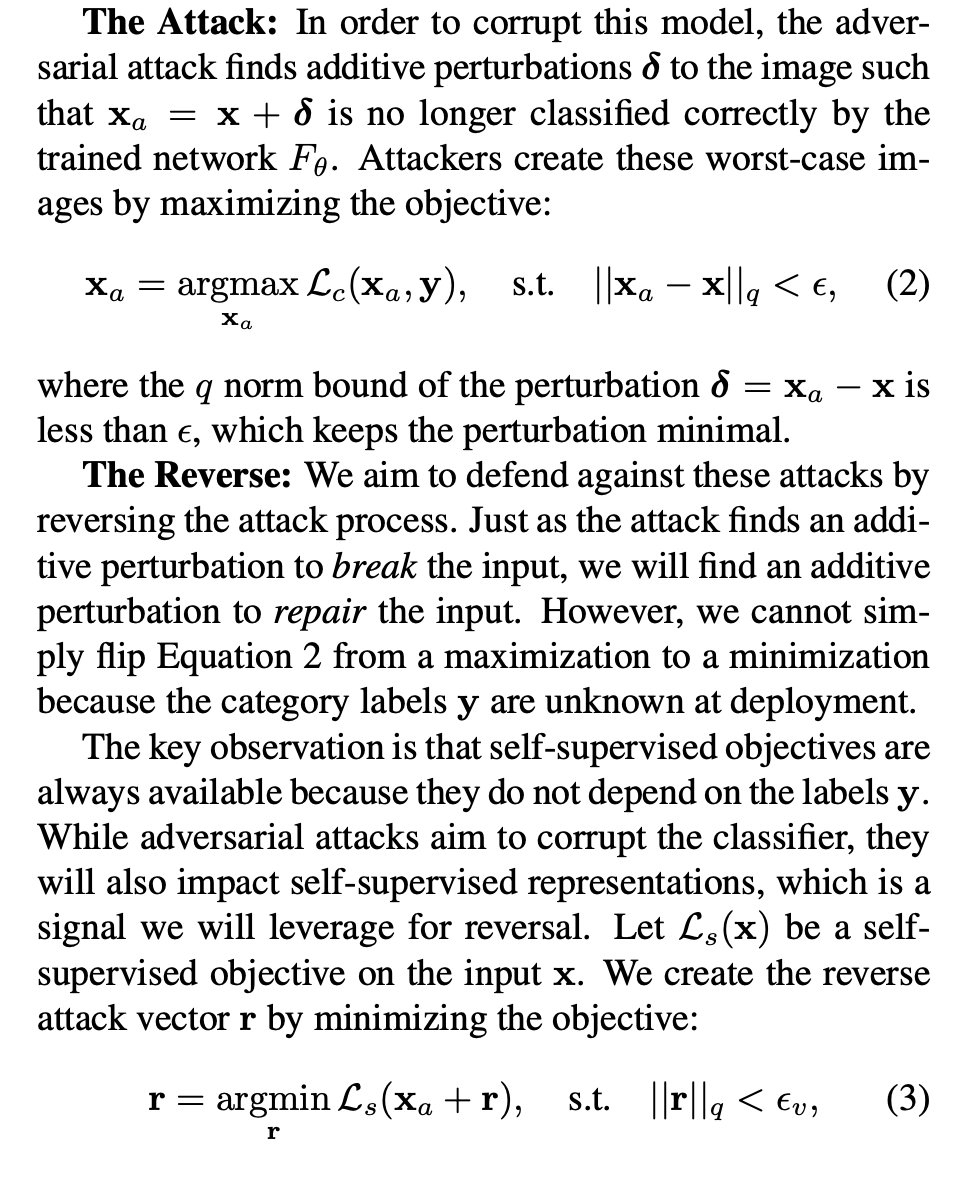

The authors argue that an adversarial perturbation changes the natural structure of the original image. An adversarial attack therefore changes not only the classifier's output but also, at the same time, the image's representation.

Using a network trained with a self-supervised contrastive objective, the authors found that this change in representation shows up as a significant shift in the contrastive embedding space.
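
For reference, a contrastive loss of this kind is commonly written in the InfoNCE form below. The notation is an assumption introduced here for illustration and is not taken from this note: $z_i$ is the embedding of the $i$-th augmented view, $y_{ij} \in \{0, 1\}$ marks whether views $i$ and $j$ come from the same image, and $\tau$ is a temperature.

$$
\mathcal{L}_s(x) = -\,\mathbb{E}_{i,j}\left[\, y_{ij} \,\log \frac{\exp\left(\cos(z_i, z_j)/\tau\right)}{\sum_{k} \exp\left(\cos(z_i, z_k)/\tau\right)} \right]
$$

Under the hypothesis above, one would expect an attacked image to score noticeably worse on this loss than a natural image, since its augmented views no longer agree as well in embedding space.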

Solution

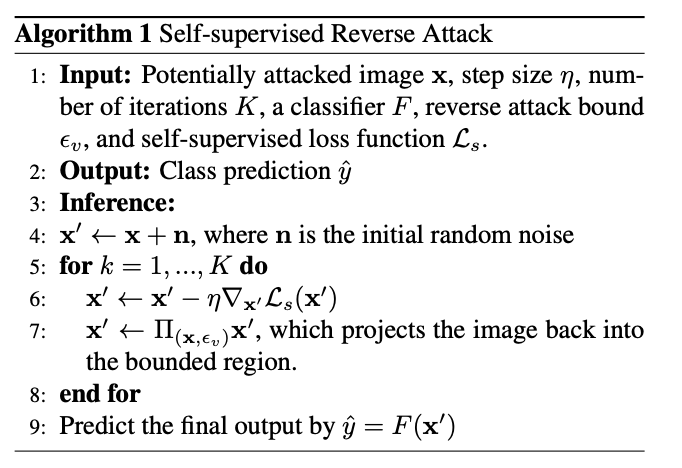

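For each potentially attacked input, a reverse adversarial attack is performed using the self-supervised contrastive loss: the input is perturbed so as to reduce that loss, which restores its natural structure and thereby recovers the image from the attack.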
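
The idea can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the encoder, the augmentations, and the negatives are hypothetical stand-ins supplied by the caller, the update is a PGD-style sign-gradient step under an L-infinity budget `eps` (a common choice that this note does not confirm), and gradients are taken by finite differences only to keep the sketch dependency-free.

```python
import math

def cosine(u, v):
    """Cosine similarity between two vectors given as lists of floats."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v + 1e-12)

def contrastive_loss(x, encode, augment_fns, negatives, tau=0.2):
    """InfoNCE-style loss: views of x should agree with each other
    more than they agree with the negatives.
    `encode`, `augment_fns`, and `negatives` are hypothetical stand-ins."""
    views = [encode(aug(x)) for aug in augment_fns]
    negs = [encode(n) for n in negatives]
    total, count = 0.0, 0
    for i, zi in enumerate(views):
        for j, zj in enumerate(views):
            if i == j:
                continue
            pos = math.exp(cosine(zi, zj) / tau)
            denom = pos + sum(math.exp(cosine(zi, zn) / tau) for zn in negs)
            total += -math.log(pos / denom)
            count += 1
    return total / count

def numerical_grad(loss_fn, x, h=1e-4):
    """Central finite-difference gradient (assumption: a real system
    would use automatic differentiation instead)."""
    grad = []
    for k in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[k] += h
        x_minus[k] -= h
        grad.append((loss_fn(x_plus) - loss_fn(x_minus)) / (2 * h))
    return grad

def reverse_attack(x, loss_fn, eps=0.1, step=0.02, iters=20):
    """Search for a perturbation r with max|r_k| <= eps that lowers
    loss_fn(x + r), and return the best x + r seen."""
    r = [0.0] * len(x)
    best_r, best_loss = list(r), loss_fn(x)
    for _ in range(iters):
        current = [xi + ri for xi, ri in zip(x, r)]
        grad = numerical_grad(loss_fn, current)
        # Sign-gradient descent step, then projection onto the eps-ball.
        r = [
            max(-eps, min(eps, ri - step * ((g > 0) - (g < 0))))
            for ri, g in zip(r, grad)
        ]
        new_loss = loss_fn([xi + ri for xi, ri in zip(x, r)])
        if new_loss < best_loss:
            best_r, best_loss = list(r), new_loss
    return [xi + ri for xi, ri in zip(x, best_r)]
```

Because the function keeps the best perturbation seen so far, the returned input never has a higher contrastive loss than the one passed in. The restored input would then be fed to the unchanged classifier, which is what lets the defense operate per input at test time.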

Experiments