NeurIPS '21: Unadversarial Examples: Designing Objects for Robust Vision

We study a class of computer vision settings wherein one can modify the design of the objects being recognized. We develop a framework that leverages this capability—and deep networks’ unusual sensitivity to input perturbations—to design “robust objects,” i.e., objects that are explicitly optimized to be confidently classified. Our framework yields improved performance on standard benchmarks, a simulated robotics environment, and physical-world experiments.
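The contrast between adversarial and unadversarial examples fits in one line of math. In the notation below (ours, not necessarily the paper's), θ are the frozen weights of the pre-trained classifier, L is its loss, (x, y) is an input and its label, and δ is a perturbation restricted to an allowed set Δ. An adversarial perturbation maximizes the loss, while an unadversarial one minimizes it. For a sticker π_y shared by every image of class y, the minimization is taken over the expected loss, where A(x, π) is a placeholder for "paste π into x" and D_y for the images of class y:

```latex
\delta_{\mathrm{adv}} = \arg\max_{\delta \in \Delta} L(\theta;\, x + \delta,\, y)
\qquad \text{vs.} \qquad
\delta_{\mathrm{unadv}} = \arg\min_{\delta \in \Delta} L(\theta;\, x + \delta,\, y)

\pi_y = \arg\min_{\pi} \; \mathbb{E}_{x \sim \mathcal{D}_y}\big[ L(\theta;\, A(x, \pi),\, y) \big]
```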

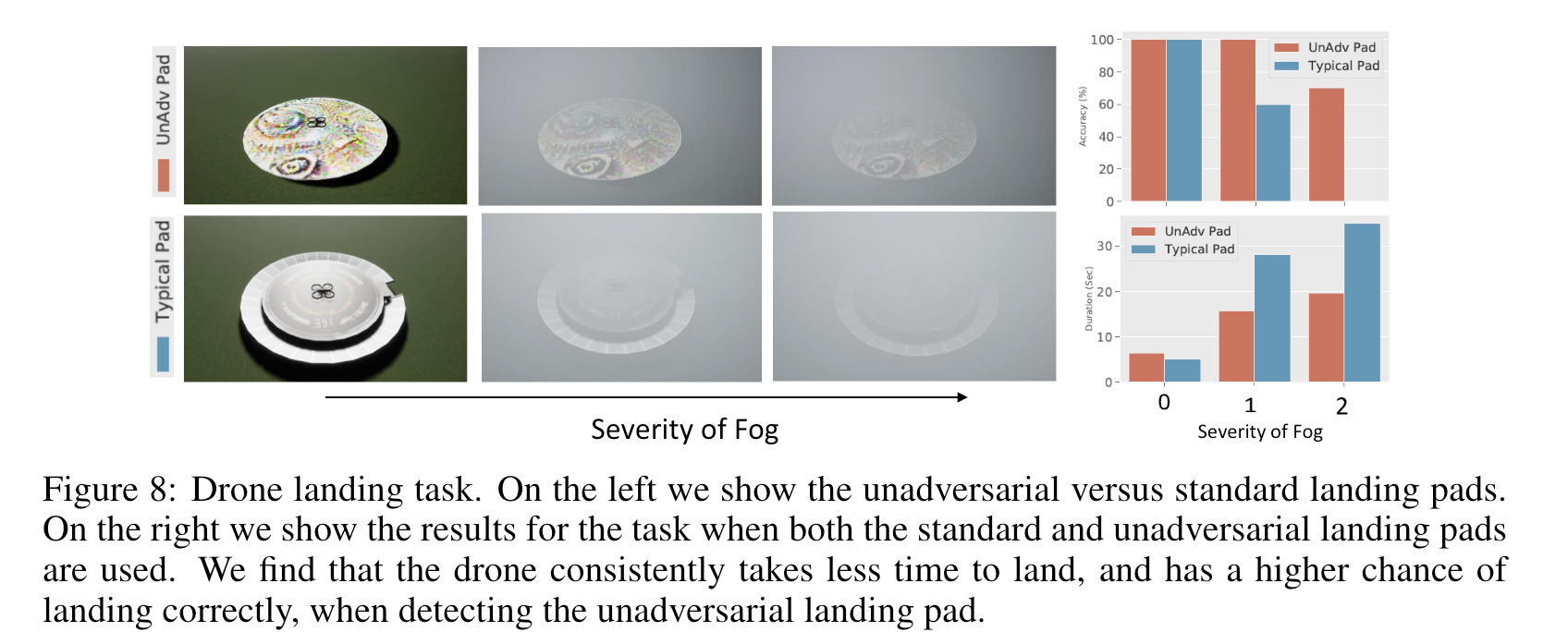

Figure 1: We demonstrate that optimizing objects (e.g., the pictured jet) for pre-trained neural networks can boost performance and robustness on computer vision tasks. Here, we show an example of classifying an unadversarial jet and a standard jet using a pretrained ImageNet model. The model correctly classifies the unadversarial jet even under bad weather conditions (e.g., foggy or dusty), whereas it fails to correctly classify the standard jet.

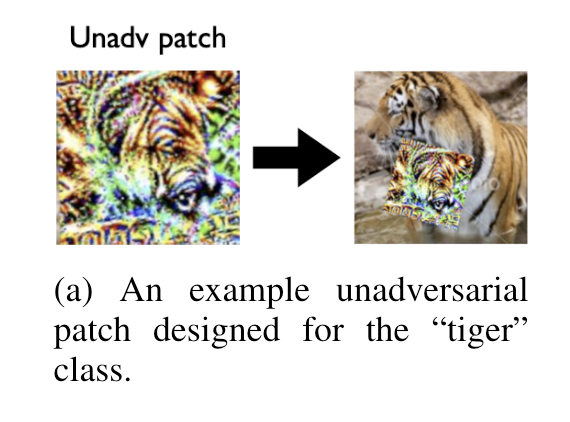

Pipeline: Unadversarial patches/stickers

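Goal: optimize a sticker (patch) that makes a pre-trained classifier recognize the object it is attached to more confidently.

- Initialize one patch per class, fixing a one-to-one mapping between patches and classification labels.

- At each iteration, attach the sticker for an image's label to that image, compute the gradient of the classification loss with respect to the pixels in the sticker, and update the sticker to decrease the loss (an adversarial patch would instead increase it).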
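The sticker loop above can be sketched end to end in plain Python. This is a toy under loudly stated assumptions: the "pre-trained model" is a hypothetical linear softmax classifier over flattened images, the sticker is pasted at a random position (our assumption about placement), and pixel values are clipped to [0, 1]. The helper names (`apply_patch`, `loss_and_patch_grad`, `optimize_stickers`) are illustrative, not the paper's code. What it does show faithfully is the structure: one sticker per label, gradients taken only with respect to sticker pixels, and a descent step rather than an ascent step.

```python
import math
import random

def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def apply_patch(image, patch, offset):
    """Paste `patch` over pixels [offset, offset + len(patch)) of a flattened image."""
    out = list(image)
    out[offset:offset + len(patch)] = patch
    return out

def loss_and_patch_grad(weights, image, patch, offset, label):
    """Cross-entropy loss of a toy linear classifier and its gradient w.r.t. the patch pixels."""
    x = apply_patch(image, patch, offset)
    logits = [sum(w * v for w, v in zip(row, x)) for row in weights]
    probs = softmax(logits)
    loss = -math.log(probs[label])
    # dL/dlogit_k = p_k - 1[k == label];  dL/dx_j = sum_k dL/dlogit_k * W[k][j]
    dlogits = [p - (1.0 if k == label else 0.0) for k, p in enumerate(probs)]
    grad = [sum(dlogits[k] * weights[k][offset + j] for k in range(len(weights)))
            for j in range(len(patch))]
    return loss, grad

def optimize_stickers(weights, dataset, patch_size, steps=200, lr=0.5, seed=0):
    """Learn one sticker per class label by stochastic gradient *descent* on the loss."""
    rng = random.Random(seed)
    dim = len(dataset[0][0])
    # Fixed mapping: every class label gets its own sticker, initialized to mid-gray.
    patches = {label: [0.5] * patch_size for _, label in dataset}
    for _ in range(steps):
        image, label = rng.choice(dataset)
        offset = rng.randrange(dim - patch_size + 1)  # random placement (assumption)
        _, grad = loss_and_patch_grad(weights, image, patches[label], offset, label)
        # Step against the gradient (an adversarial patch would step along it),
        # then clip back to the valid pixel range [0, 1].
        patches[label] = [min(1.0, max(0.0, p - lr * g))
                          for p, g in zip(patches[label], grad)]
    return patches
```

With a classifier whose class-1 score grows with brightness, for example `weights = [[-1.0] * 8, [1.0] * 8]`, the class-1 sticker drifts toward white and the class-0 sticker toward black, which is what "confidently classified" means for this model. Clipping is the simplest way to keep the sticker printable; a real implementation would also randomize scale, rotation, and lighting.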

Pipeline: Unadversarial textures

Requires a set of 3D meshes for each object class and a set of background images used to simulate a scene.

- For each 3D mesh, our goal is to optimize a 2D texture which improves classifier performance when mapped onto the mesh.

- At each iteration, we sample a mesh and a random background;

- we then use a 3D renderer (Mitsuba [NVZ+19]) to map the corresponding texture onto the mesh.

- Rendering is non-differentiable, so we use a linear approximation of the rendering process.

- We overlay the rendering onto a random background image and feed the composed image into the pre-trained classifier, using the label of the sampled 3D mesh. We then compute (this time approximate) gradients of the model's loss with respect to the texture and update the texture to decrease that loss.
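The texture loop can be sketched the same way once rendering is replaced by a linear map. Here the linear approximation is modeled, as an assumption, by a hypothetical `uv_map`: a list of `(pixel, texel, weight)` entries saying how much each texture pixel contributes to each image pixel for a given mesh and viewpoint, plus an object `mask` that decides where the background shows through. The paper's actual approximation of Mitsuba may be built differently; the point of the sketch is that a linear renderer makes the texture gradient a simple chain rule, namely the image gradient pulled back through the same weights used for rendering.

```python
import math
import random

def softmax(logits):
    """Numerically stable softmax over a list of scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def render_and_composite(texture, uv_map, mask, background):
    """Hypothetical linearized renderer: each (pixel, texel, weight) entry adds
    weight * texture[texel] to that pixel; pixels outside the mask show the background."""
    rendered = [0.0] * len(background)
    for pixel, texel, weight in uv_map:
        rendered[pixel] += weight * texture[texel]
    return [r if inside else b for r, inside, b in zip(rendered, mask, background)]

def loss_and_texture_grad(weights, texture, uv_map, mask, background, label):
    """Cross-entropy of a toy linear classifier and its gradient w.r.t. the texture."""
    image = render_and_composite(texture, uv_map, mask, background)
    logits = [sum(w * x for w, x in zip(row, image)) for row in weights]
    probs = softmax(logits)
    loss = -math.log(probs[label])
    dlogits = [p - (1.0 if k == label else 0.0) for k, p in enumerate(probs)]
    dimage = [sum(dlogits[k] * weights[k][i] for k in range(len(weights)))
              for i in range(len(image))]
    # Chain rule through the linear renderer: only pixels covered by the object
    # receive gradient, scaled by the same weights used for rendering.
    grad = [0.0] * len(texture)
    for pixel, texel, weight in uv_map:
        if mask[pixel]:
            grad[texel] += weight * dimage[pixel]
    return loss, grad

def optimize_textures(weights, meshes, backgrounds, texture_size, steps=100, lr=0.5, seed=0):
    """meshes: list of (uv_map, mask, label) tuples; returns one texture per mesh."""
    rng = random.Random(seed)
    textures = [[0.5] * texture_size for _ in meshes]
    for _ in range(steps):
        idx = rng.randrange(len(meshes))        # sample a mesh...
        background = rng.choice(backgrounds)    # ...and a random background
        uv_map, mask, label = meshes[idx]
        _, grad = loss_and_texture_grad(weights, textures[idx], uv_map, mask,
                                        background, label)
        # Descend on the approximate gradient and keep texels in [0, 1].
        textures[idx] = [min(1.0, max(0.0, t - lr * g))
                         for t, g in zip(textures[idx], grad)]
    return textures
```

Because the approximation is linear, the gradient is exact for the stand-in renderer but only approximate for the true one. This matches the "this time approximate" caveat in the notes.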

Eval:

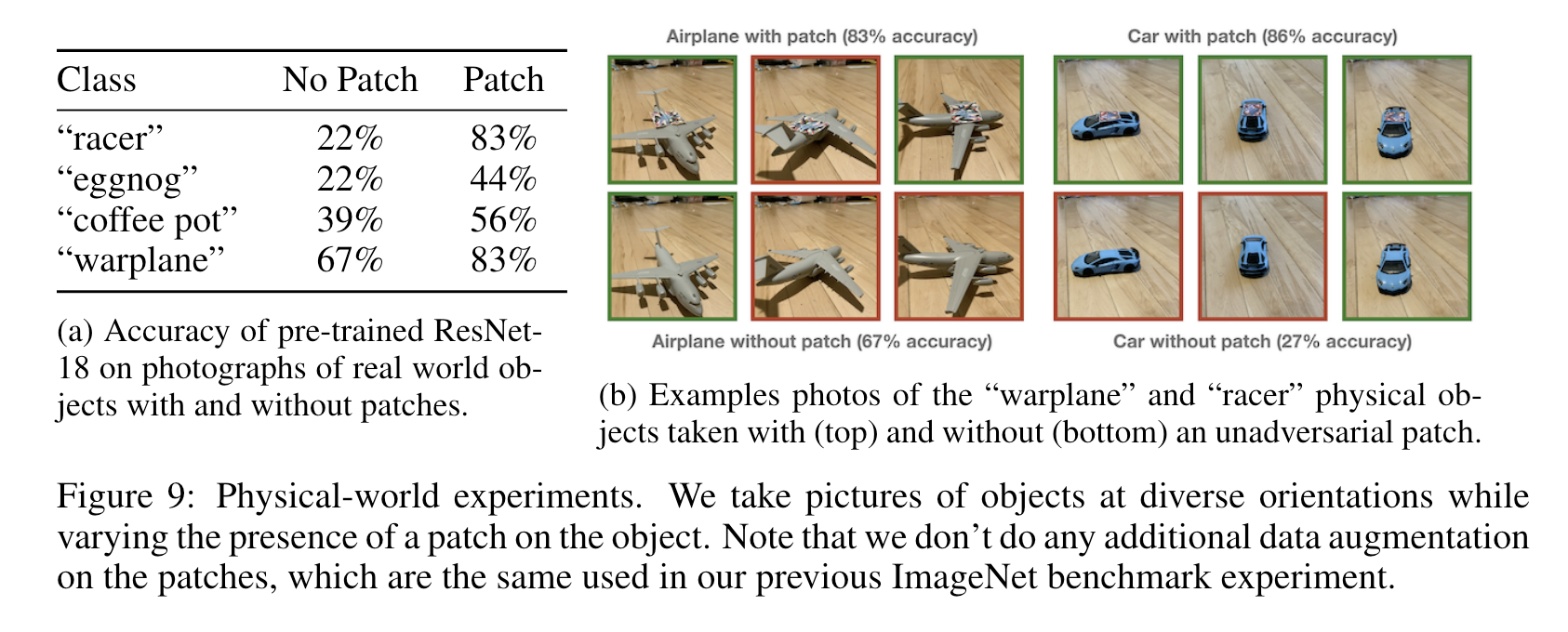

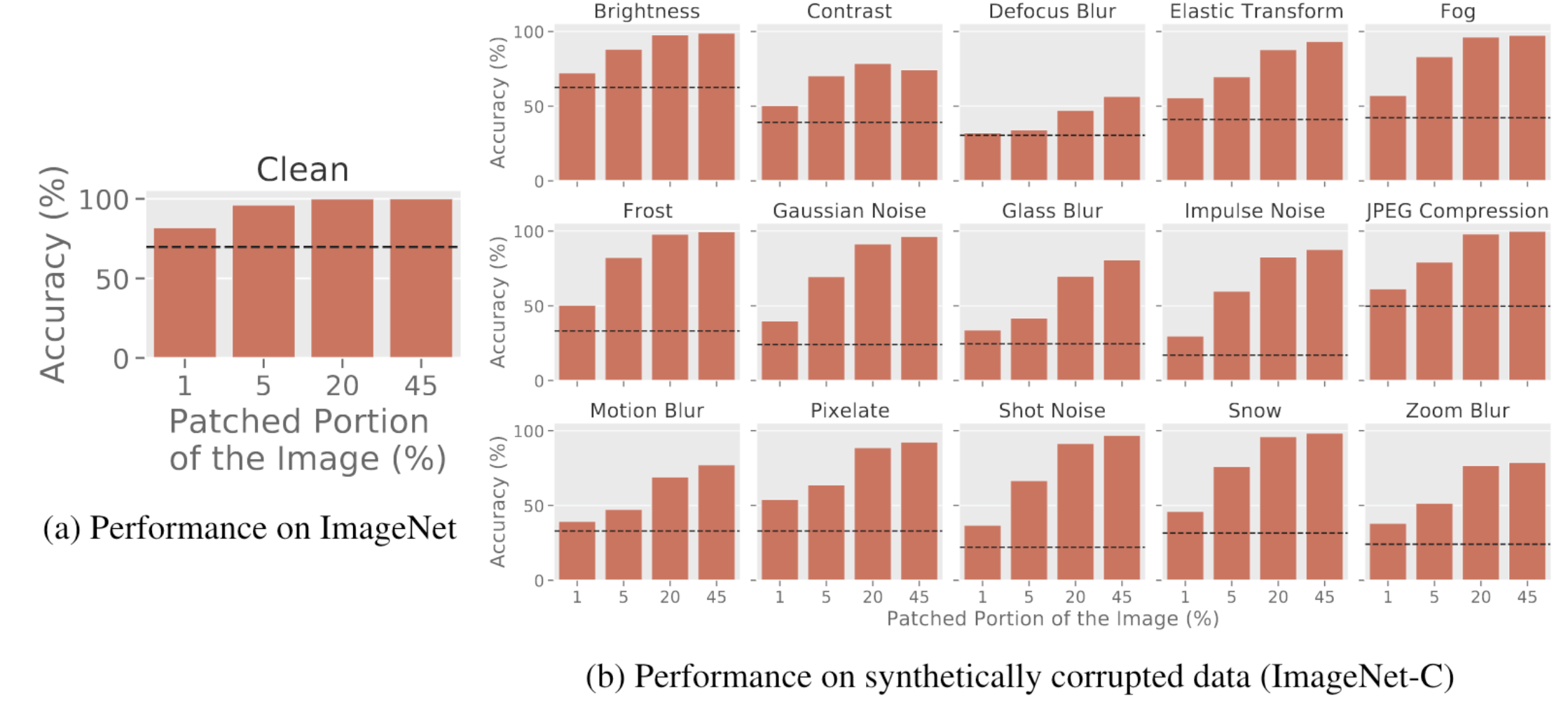

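Patches: apply the unadversarial patches to images from a corrupted dataset (ImageNet-C).

Textures: render the textured objects under adverse weather conditions (e.g., fog or dust).

Real world: print the stickers and test them in physical-world experiments.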
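A toy version of the patch evaluation compares top-1 accuracy on corrupted images with and without each image's class sticker. Additive Gaussian noise stands in for one of the ImageNet-C corruption types. The linear classifier, the fixed sticker `offset`, and the choice to corrupt the scene after pasting the sticker (so the sticker is degraded too) are assumptions for illustration, not the paper's exact protocol.

```python
import random

def predict(weights, image):
    """Highest-scoring class under a toy linear classifier (ties go to the lowest index)."""
    scores = [sum(w * x for w, x in zip(row, image)) for row in weights]
    return max(range(len(scores)), key=scores.__getitem__)

def gaussian_noise(image, sigma, rng):
    """Additive Gaussian noise clipped to [0, 1], standing in for one ImageNet-C corruption."""
    return [min(1.0, max(0.0, x + rng.gauss(0.0, sigma))) for x in image]

def accuracy_under_corruption(weights, dataset, sigma, patches=None, offset=0, seed=0):
    """Top-1 accuracy when every image is corrupted, optionally after pasting its class's sticker."""
    rng = random.Random(seed)
    correct = 0
    for image, label in dataset:
        if patches is not None:
            patch = patches[label]
            image = list(image)
            image[offset:offset + len(patch)] = patch
        # The corruption is applied to the whole scene, sticker included.
        corrupted = gaussian_noise(image, sigma, rng)
        correct += predict(weights, corrupted) == label
    return correct / len(dataset)
```

Running both arms with the same `seed` gives each image the same noise draw in the two conditions, so any accuracy gap comes from the stickers rather than from different corruption samples.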